The Human Side of Software Engineering in the Age of AI - Part 1

Reflections on how AI-generated code is changing our work and our well-being

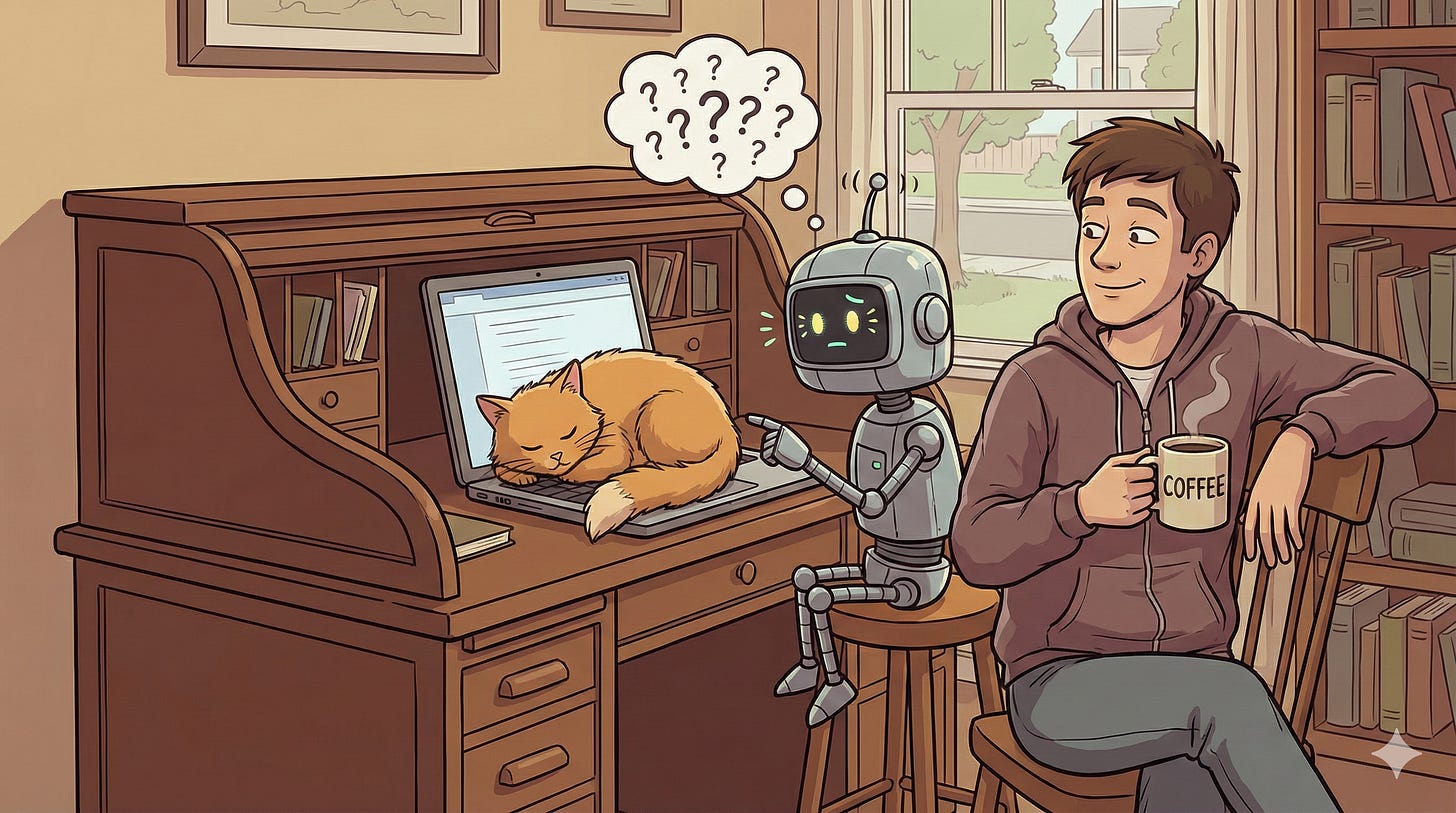

AI-assisted coding went from “interesting experiment” to “industry baseline” almost overnight. Many discussions today revolve around security concerns and the risks of so-called vibe coding, and those are absolutely important. But in this article, I want to explore a different angle—one that feels deeply personal, social, and ethical.

These thoughts come from my own experience, observations, and conversations with colleagues. They are not conclusions, just reflections meant to spark discussion. If you disagree or see things differently, I genuinely invite you to leave a comment—I’d love to write a follow-up article including your perspective.

The Internal Tension: Fear and Identity

When Gen AI and the new LLMs were introduced at my company, my first reaction was pure excitement. I was genuinely amazed at how powerful these models were and how much they could improve the daily life of a Software Engineer. But as the hype grew, and as each new model became noticeably more capable, a new question started to take shape in the back of my mind:

“Am I going to be replaced by AI?”

Watching AI generate high-quality code in seconds can be… unsettling.

For years, many of us built our professional identities around being great coders—people who could dissect complex systems, understand edge cases, and write elegant solutions under pressure. And suddenly, here was a tool doing parts of that faster, cleaner, and without coffee breaks.

It raised questions I didn’t always feel comfortable admitting out loud:

Is my expertise still relevant?

Will I fall behind?

Can I adapt fast enough?

To cope, I leaned heavily on a mantra many engineers quietly repeat to themselves:

We are Software Engineers, not Software Coders.

Our job is to solve problems—not just write code.

This became my first coping strategy, a mental anchor that helped me stay grounded.

AI’s “Super-Training” and the Dip in Confidence

As time went on, and as I kept exploring the capabilities of new LLM versions, I started noticing something else—an erosion of confidence. Every now and then I caught myself thinking:

“AI has been trained on millions of examples. Of course it can code better than me.”

Sometimes it truly does. Other times, its output is verbose, naive, or incorrect. But emotionally, that distinction doesn’t always matter. The pressure remains: How do you compare yourself to something trained on the entire internet’s worth of engineering output?

But here’s an important realization I eventually landed on:

Knowing something is not the same as doing something.

LLMs know how to do things—but they don’t apply that knowledge until I ask.

It is my experience that guides the work, provides judgment, anticipates risks, and gives direction. AI is execution; I am intent.

This distinction helped me regain my confidence.

The Cognitive Shift: Losing the “Slow Mode”

Unfortunately, the challenges didn’t stop there.

After months of working with AI, I realized I was feeling more mentally exhausted than before. Energy drained faster. My daily routine felt heavier even though the “typing” part of the job had decreased.

Eventually, I analyzed my workflow and understood why:

my entire rhythm of work had changed.

Before AI, coding had natural pauses baked in:

quiet moments while typing

little breaks created by writing boilerplate

thinking time hidden inside the slowness of manual work

predictable flow states without constant context switching

Now the work looks more like:

Research the problem

Craft a prompt

Anticipate risks

Evaluate the output

Run agents again with constraints

Review the final code

Repeat immediately on the next task

There’s no slow mode anymore.

Productivity increased, but so did the intensity.

My “cure,” or at least my countermeasure, became simple: create my own slow moments.

Unwind. Pause intentionally. Do things manually. Allow your brain to slow down and think about the problem in the background. This is important.

Sometimes I write a journal entry about my day, just to reset my brain and get back some quiet.

The Subtle Long-Term Risk: Losing Expertise

Everything seemed fine—until one day I realized I couldn’t quickly answer a question I should have been able to answer instantly.

That’s when it hit me:

I had been relying too heavily on AI.

My brain wasn’t memorizing facts or walking through reasoning paths anymore. My working memory, which helps me analyze and solve problems, was declining. I wasn’t actively learning, because AI was doing the cognitive heavy lifting.

The risk became very real: if AI writes the code, I get fewer chances to practice core staff-engineer skills:

reviewing and evaluating solutions

debugging tricky issues

thinking ahead about architecture

understanding complexity trade-offs

I’m grateful I noticed it early.

My fix?

I went back to the basics: manual notes, diagrams, mind-maps, summaries.

Anything that forces me to process information and learn, not just consume it.

Keeping the Human in the Center

These experiences together shaped a simple but important realization:

AI is changing not just how we code, but how we feel about coding.

It pressures identity, drains mental energy, and quietly threatens the skills that define senior engineers. But at the same time, it opens new possibilities, speeds us up, and removes drudgery.

The key is staying human in the loop.

In this transition, it’s important not only to appreciate how AI boosts productivity and creates new opportunities, but also to pay close attention to our own well-being—and to support one another as we adapt to this new reality.